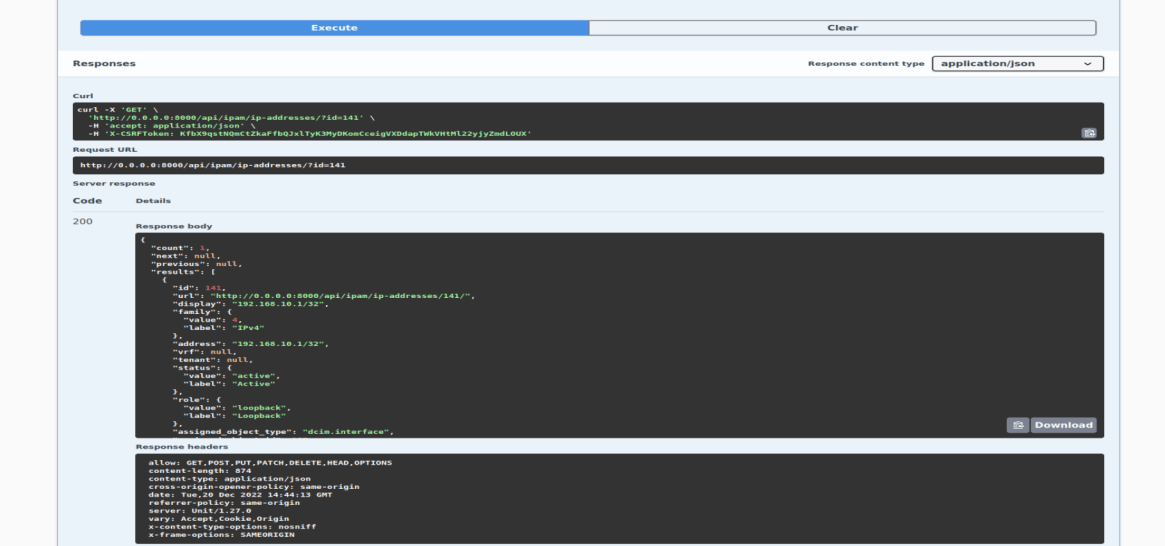

SONiC (Software for Open Networking in the Cloud) emerged from Microsoft in 2016. It was built to simplify connectivity across Azure's complex cloud infrastructure. SONiC runs on Debian and uses a microservice-driven, containerized design in which core applications run in separate Docker containers. This separation lets individual components be updated or restarted independently and eases integration across hardware platforms. Its northbound interfaces (NBIs), including gNMI, REST, SNMP, the CLI, and OpenConfig YANG models, integrate smoothly with automation systems, making SONiC a robust, scalable, and adaptable networking solution.

Why an Open NOS Is the Future

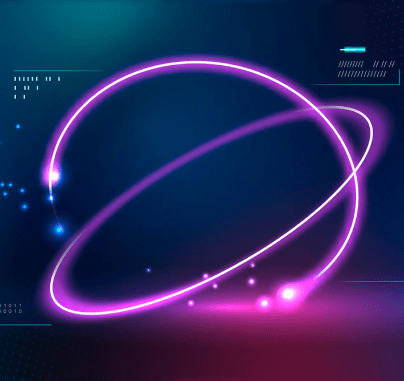

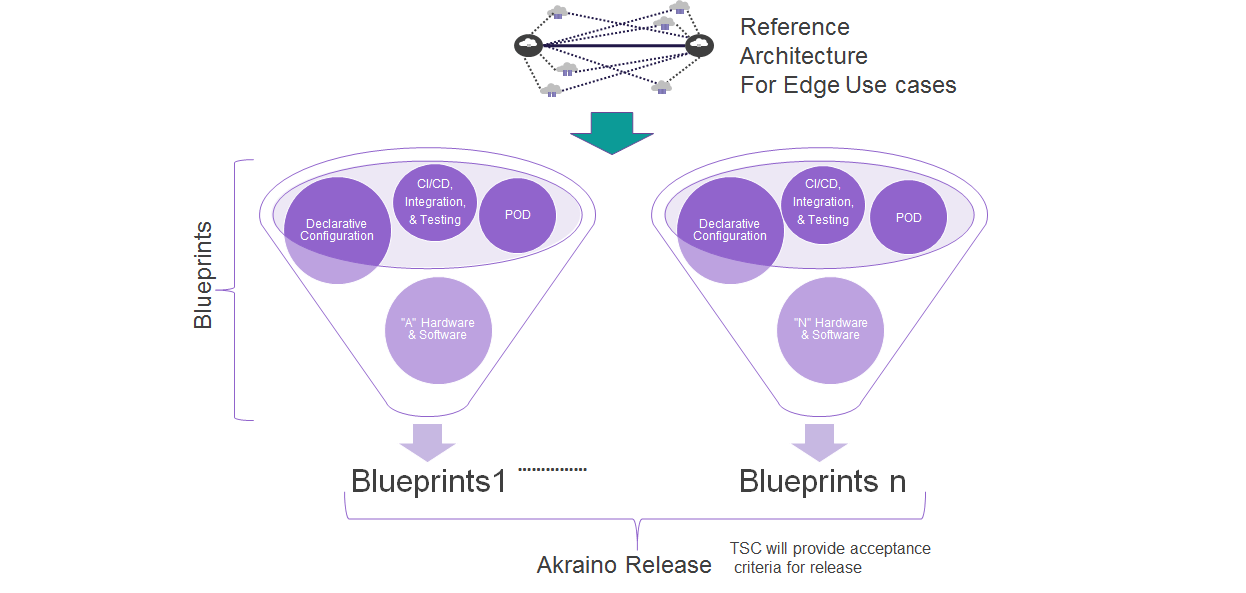

Adopting an open Network Operating System (NOS) like SONiC promotes disaggregation: the NOS is decoupled from the underlying switch hardware, allowing a flexible, plug-and-play approach. Because the same software interface runs across different hardware architectures, operators gain supply chain flexibility and avoid vendor lock-in. In-house automation frameworks can be kept as they are, without per-vendor adjustments. SONiC's DevOps-oriented model speeds up feature delivery and bug fixes, reducing dependence on vendor-specific release schedules. The open-source ecosystem also fosters innovation and broad collaboration, supporting custom use cases across many kinds of deployments. Together, these benefits can translate into significant cost savings, lowering Total Cost of Ownership (TCO), Operational Expenditure (OpEx), and Capital Expenditure (CapEx), which makes SONiC a compelling choice for modern networks.

Why SONiC Stands Apart

Among the many open-source NOS options available, SONiC distinguishes itself through its growing adoption across enterprises, hyperscale data centers, and service providers, which reflects its versatility and robustness. Community contributions have steadily refined SONiC, extending its feature set and tailoring it to specific use cases while keeping its architecture adaptable.

Key Attributes of SONiC:

Open Source:

- Vendor-neutral: Operates on any compatible vendor hardware.

- Accelerated feature deployment: Operators can make custom modifications and resolve bugs quickly.

- Community-driven: Contributions benefit the broader SONiC ecosystem.

- Cost-effective: Reduces TCO, OpEx, and CapEx significantly.

Disaggregation:

- Modular architecture: Containerized components improve resilience and simplify maintenance.

- Decoupled functionalities: Allows independent customization of software components.

Uniformity:

- Abstracted hardware: SAI simplifies underlying hardware complexities.

- Portability: Ensures consistent behavior and tooling across diverse hardware platforms.

DevOps Integration:

- Automation: Seamless orchestration and monitoring.

- Programmability: Utilizes full ASIC capabilities.
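To make the "abstracted hardware" and "portability" points more concrete, here is a minimal Python sketch of the abstraction pattern that SAI embodies. It is an illustration under stated assumptions, not SONiC code: the names `SwitchAsic`, `create_vlan`, `set_port_admin_state`, `VendorAAsic`, `VendorBAsic`, and `provision_access_port` are all hypothetical, and the "vendor SDK" strings are placeholders. The real SAI is a C API with a far larger surface.

```python
from abc import ABC, abstractmethod


class SwitchAsic(ABC):
    """Hypothetical vendor-neutral interface, loosely modeled on the idea of SAI.
    Method names are illustrative only; they are not the real SAI C API."""

    @abstractmethod
    def create_vlan(self, vlan_id: int) -> str: ...

    @abstractmethod
    def set_port_admin_state(self, port: str, up: bool) -> str: ...


class VendorAAsic(SwitchAsic):
    # Hypothetical backend: translates generic calls into vendor A's (placeholder) SDK calls
    def create_vlan(self, vlan_id: int) -> str:
        return f"vendorA_sdk: vlan_create({vlan_id})"

    def set_port_admin_state(self, port: str, up: bool) -> str:
        return f"vendorA_sdk: port_set({port}, admin={'up' if up else 'down'})"


class VendorBAsic(SwitchAsic):
    # Hypothetical backend for a different ASIC with a different (placeholder) API style
    def create_vlan(self, vlan_id: int) -> str:
        return f"vendorB_api: add_vlan id={vlan_id}"

    def set_port_admin_state(self, port: str, up: bool) -> str:
        return f"vendorB_api: {port} enable={up}"


def provision_access_port(asic: SwitchAsic, port: str, vlan_id: int) -> list[str]:
    """Automation written once against the abstract interface.
    It runs unchanged on any backend that implements SwitchAsic."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be in 1..4094")
    return [asic.create_vlan(vlan_id), asic.set_port_admin_state(port, True)]


if __name__ == "__main__":
    # Same workflow, two different (hypothetical) hardware backends
    for asic in (VendorAAsic(), VendorBAsic()):
        for line in provision_access_port(asic, "Ethernet0", 100):
            print(line)
```

Because `provision_access_port` depends only on the abstract interface, moving to different hardware means swapping the backend rather than rewriting the workflow. In miniature, this is why a uniform hardware abstraction lets the same automation run across vendors.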

Real-World SONiC Deployment

Despite SONiC's evident advantages, network operators often raise critical questions: Is SONiC suitable for my network? What does support entail? How do I ensure code quality? How do I train my team? The true test of a NOS is its user experience. For open-source solutions like SONiC, success depends on delivering a seamless experience backed by robust vendor support.

Operators considering SONiC typically fall into two categories. The first have an in-house ecosystem capable of running an open NOS on their own. The second are still exploring its potential. For the first group, SONiC may be customized to meet specific network demands, possibly through a private distribution or a vendor-backed commercial version. The second group, often pursuing simpler use cases, typically relies on community SONiC, balancing its open-source nature with vendor validation.

SONiC: Leading Disaggregation and Open Networking

The shift toward open networking, driven by disaggregation, has brought SONiC into the spotlight. Its flexibility, cost-effectiveness, and capacity for innovation make it an attractive option for hyperscalers, enterprises, and service providers alike. By embracing an open architecture and standard merchant silicon, SONiC offers a cost-efficient alternative to traditional closed systems, with comparable features and support at a fraction of the price.

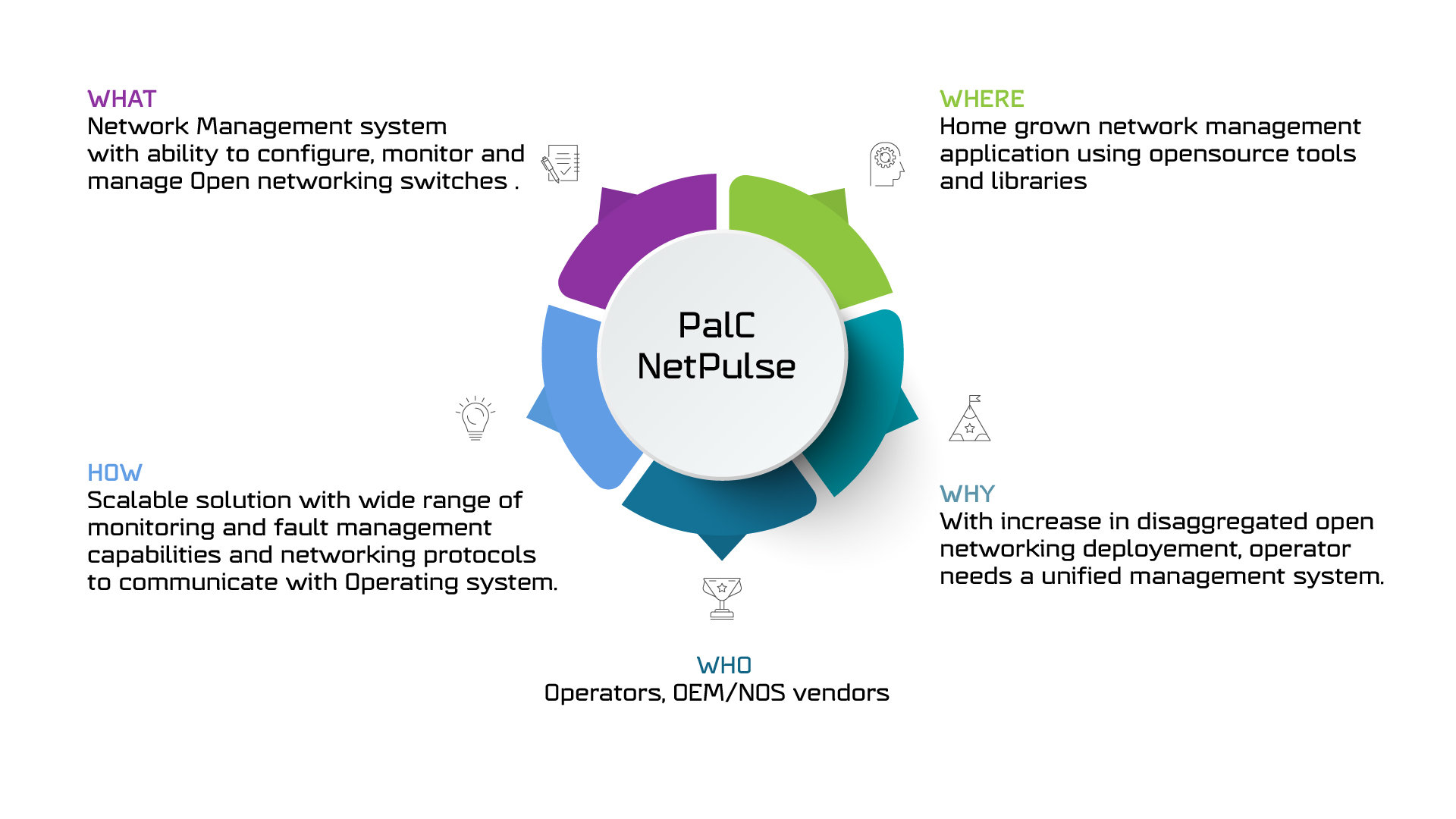

Managing SONiC: Overcoming Deployment Challenges

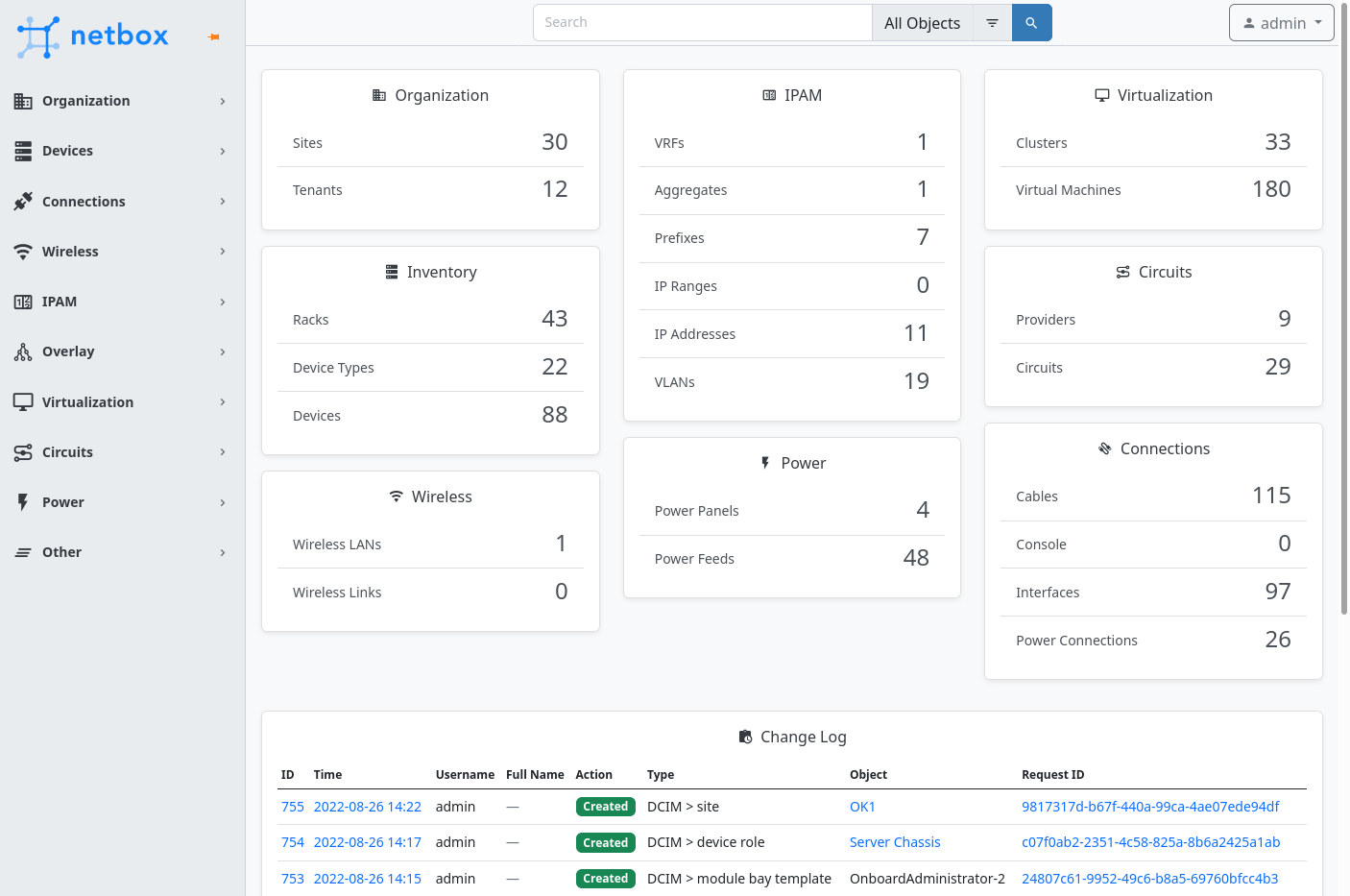

Companies such as Microsoft, LinkedIn, and eBay have successfully deployed SONiC, but operators adopting it often encounter challenges such as vendor fragmentation, compatibility issues, and inconsistent support across hardware platforms. Managing diverse hardware can become complex because each vendor has its own configurations, and updates can disrupt operations by introducing compatibility problems. Running different SONiC versions across vendors can also lead to inconsistent behavior and unreliable telemetry data. In addition, varying support levels make troubleshooting difficult, and teams often need extra training to handle the nuances of each vendor's implementation. Although these challenges can feel overwhelming, careful planning, skilled personnel, and the right support make them manageable and pave the way for a smooth, successful SONiC deployment.

PalC’s SONiC NetPro Suite directly addresses these challenges by offering comprehensive solutions designed to streamline SONiC adoption. Through the Ready, Deploy, and Sustain packages, PalC ensures end-to-end support, providing expert guidance to fill gaps in in-house expertise and fostering a DevOps-friendly culture for smooth operations. PalC’s strong partnerships with switch and ASIC vendors guarantee seamless integration and full infrastructure support, while its rigorous QA processes ensure performance and reliability. By offering customized support and clear cost assessments, PalC simplifies SONiC deployment, minimizing disruptions and ensuring a scalable, secure, and optimized network infrastructure.

Conclusion

Choosing the right SONiC version is crucial for network optimization. By assessing feature needs, community support, hardware compatibility, and security, organizations can make informed decisions that enhance network performance. SONiC’s evolution from a Microsoft project to a leading open-source NOS underscores its transformative potential and solidifies its role in future cloud networking.